Author

Topic: Augmented reality game to save the world (Read 46166 times)

Zanthius

Enlightened

Offline

Posts: 941

Yeah, because they might detonate the Tsar Bomba once over a deserted region and then scrap the project soon after? Because that is what the "dictatorship" that actually had a Tsar Bomba actually did with it. How irresponsible of them.

Well, even if it was detonated in a deserted area, people might still argue that was irresponsible. All the nuclear tests in the 50s and 60s had quite an effect on the concentration of C14 in the atmosphere:

And that the Cold War didn't turn into a nuclear war could have just been a matter of luck. A 5-year-old kid might also not have done anything stupid with a Tsar Bomba, simply because of luck. Anyhow, killer robots can potentially move from any deserted region you introduce them to, to any populated region on Earth. Nuclear weapons and killer robots are very different types of weapons.

That does not follow, since there is always some group in society people will see as criminals and potentially want surveilled. Rapists, murderers, thieves, drug traffickers, gun traffickers, people traffickers, killer robot engineers. Unless you advocate for total anarchy where there are no crimes and no criminals, there will always be many cases where surveillance of some people by authorities can be justified to the populace.

Sure, but at least here, there are very strict rules regarding who the police are allowed to surveil, and if the police have been found to do illegal surveillance, they can be punished just like any other criminal. We can also punish the government if they are involved in illegal activities. One of the problems with dictatorships is that the police and the government are above the law.

Unless you feel that someone labeled a "dictator" by your media

The world is just flowers. There are no dictators and malicious people in the world. How much have you traveled? Have you lived for long periods of time outside the US? I have been to Russia multiple times. I have a close friend that lives in Moscow. I also have a friend from Ukraine. He wasn't very surprised when Russia invaded Crimea.

People with high cultural intelligence know that people can think and experience the world very differently in different countries, because of different traditions and different educational systems. We are not all the same.

« Last Edit: September 19, 2018, 07:14:35 pm by Zanthius »

Deus Siddis

Enlightened

Offline

Posts: 1387

Well, even if it was detonated in a deserted area, people might still argue that was irresponsible. All the nuclear tests in the 50s and 60s had quite an effect on the concentration of C14 in the atmosphere:

Both the US and USSR were doing that. "Dictator" Harry Truman even tested two nuclear weapons on Japanese cities. Or wait no that was a democracy that did that.

Anyhow, killer robots can potentially move from any deserted region you introduce them to, to any populated region on Earth. Nuclear weapons and killer robots are very different types of weapons.

Actually, ICBMs are nuclear-armed killer robots that do exactly that. We have had them for decades and are all still here to tell the tale.

Sure, but at least here, there are very strict rules regarding who the police are allowed to surveil, and if the police have been found to do illegal surveillance, they can be punished just like any other criminal. We can also punish the government if they are involved in illegal activities. One of the problems with dictatorships is that the police and the government are above the law.

As you pointed out earlier, judging laws without taking into account how thoroughly they are enforced is rather pointless.

The world is just flowers. There are no dictators and malicious people in the world. How much have you traveled? Have you lived for long periods of time outside the US? I have been to Russia multiple times. I have a close friend that lives in Moscow. I also have a friend from Ukraine. He wasn't very surprised when Russia invaded Crimea.

It is great that you are an accomplished tourist but I think you have missed my point. I was using the word "you" not to refer to you personally, but in the same general sense that you were using it, to refer to the average human being (and more specifically their mindset).

You claimed that with universal acceptance of a modern western definition of human rights, people would not be motivated to develop new weapon systems since such things could be used to harm the rights of others. But this whole time you have been making an excellent case for how such weapons development can be justified to people anyway -- to defend against dictators (who may or may not themselves be developing such weapons).

"Some dictator somewhere in the world is developing killer robots (maybe). We must develop this technology also to defend ourselves or destroy this person before he does too much harm."

Exactly this kind of rhetoric can and has been used to justify ("humanitarian") military invasions and advanced weapons programs like armed drones.

Deus Siddis

Enlightened

Offline

Posts: 1387

Think more of an AI that is a military general. This would be advantageous since the AI general is capable of analyzing much more data much faster than any human general. If the AI general also has access to drones and ICBMs, it can also accomplish things much faster than by giving orders to human soldiers.

If either China, Russia or the US decides to make an AI general, they will have a much better general than the other superpowers. So they are probably all working on making AI generals.

Also, why not make a fully automatic factory producing drones and controlled by the same AI? Then the AI general can produce as many drones as it needs. They are probably already experimenting with such systems in China.

Now let's build a few fully automatized aircraft carriers filled with tons of drones, but without any people. Get yourself a few such aircraft carriers, and you can have the AI general waging war all by itself. This is maybe 10 years from now.

This is effectively a whole new topic that dwarfs and supersedes (no pun intended) your original topic. In this scenario the question becomes what is the evolutionary advantage of a civilization that keeps humans around at all?

If you have machines that do everything a human can do, but better, then the civilization that has the smallest human population, and thus wastes the least resources keeping these no-longer-useful humans alive, will outcompete the rest in time. Ultimately, the civilization with ~0 humans will prevail.

Assuming machine learning is not the same as strong artificial intelligence you could also see a less dramatic evolutionary path for human civilization where we are still useful for the highest levels of research and development of new technologies. In that case, we should expect to see the evolutionary plateau be a civilization where only people with an IQ of over X still exist and they almost all are involved in technological innovation. Effectively, automation replaces humans at increasingly less mundane tasks until it reaches a point where it can no longer be improved or improved quickly and then everyone still around gets to keep having a "reason to exist" (from a cold, natural selection viewpoint).

In both cases there is also the possibility of some remaining random humans living like and with wild animals in the few parts of the world not worth exploiting by the automated civilization.

Zanthius

Enlightened

Offline

Posts: 941

This is effectively a whole new topic that dwarfs and supersedes (no pun intended) your original topic. In this scenario the question becomes what is the evolutionary advantage of a civilization that keeps humans around at all?

The point of a human life obviously isn't just to be an efficient worker. There is something very different about creating machine learning algorithms for peaceful purposes and creating machine learning algorithms for war. If all our machine learning algorithms are just for peaceful purposes we might end up being "useless" in the conventional sense, but that doesn't necessarily mean we should cease to exist. We just need to "reinvent" ourselves and find a different purpose. Machine learning algorithms that are created for destructive purposes might not just make humanity "useless"; they might actually wipe us out. Then it won't necessarily be possible for you to "reinvent" yourself and find a different purpose.

The next decades are going to be extremely challenging no matter what, and we cannot rely on idiots like Donald Trump to guide us through this difficult period. People such as Bret Weinstein and Yuval Noah Harari might be able to guide humanity through it. But they cannot necessarily take over the old political world simply by making YouTube videos. We need something like this augmented reality game to engage people in saving the world. Maybe we could involve Bret Weinstein and Yuval Noah Harari in making this game, and make them into the "bosses" who give orders to the players. People playing this game would then gradually develop a stronger concept of Bret Weinstein and Yuval Noah Harari as their true leaders.

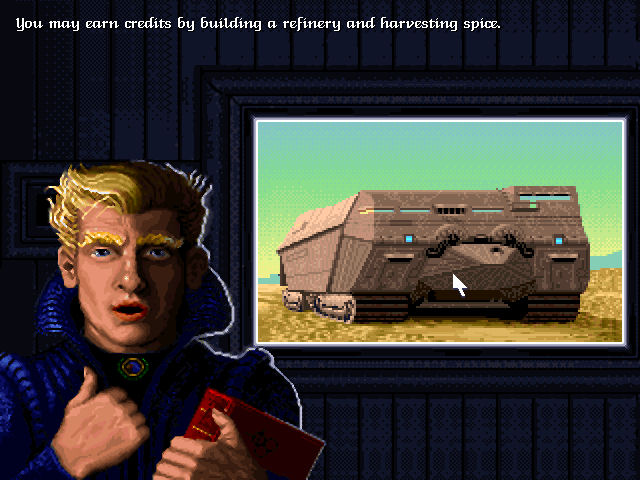

You remember the mission briefings in, for example, the game Dune 2:

Well, in this game, Bret Weinstein or Yuval Noah Harari could be the guys giving you mission briefings.

We would of course also need some proficient software engineers to make such a game. I guess there should be many such people here. Wouldn't this be much more fun than working on and/or playing completely fictional games, which don't necessarily have any impact on the real world? Especially since the real world is in such a critical phase now.

« Last Edit: September 20, 2018, 03:42:32 pm by Zanthius »

Deus Siddis

Enlightened

Offline

Posts: 1387

The point of a human life obviously isn't just to be an efficient worker.

From a rational, Darwinian standpoint, the "purpose" of human life is to survive by remaining competitively adapted to our changing environment. If the civilizations we started become so adaptive and competitive autonomously that they no longer benefit from having us around, then we will have become a vestigial feature that will ultimately disappear due to natural selection continuously preferring those civilizations that do not burden themselves with us.

This is why development of "peaceful" automation is actually tremendously more dangerous to the survival of our species than warfare automation.

By itself, Military Automation can only cause harm until it runs out of the vital resources humans will no longer want to provide it with, or has killed enough of us and our infrastructure that we no longer can provide for it. It burns itself out almost like a virus.

In contrast, by itself, Infrastructure Automation has no limits on how long it can run without us. It could continuously rebuild itself, reinvent itself and not appear as threatening as it really is until we had long since given over to it too much control of resources for us to be able to reverse the process. It could then decide to simply convert our food-growing croplands into croplands producing bio-fuel or bio-plastics. Or set up orbital solar collectors that it develops to the size of continents, thereby reallocating the natural source of energy we earthly organisms depend on to its own projects. And if we did actively retaliate against it, whatever civilian-type security systems it did not already have could be developed quickly to deal with those of us that damage its infrastructure.

Deus Siddis

Enlightened

Offline

Posts: 1387

A machine learning algorithm doesn't necessarily care anything about if it benefits from anything. It cares about doing whatever it is set to do as efficiently as possible.

Exactly the same can be said for almost all organisms. Bacteria do not "care" about anything either (as far as we know); they are just biological machines following their genetic programming. But that genetic programming mutates over generations and is directed by natural selection to do things in ways you would not have guessed by looking at their earliest ancestors billions of years ago. (That is the same kind of thing the article you linked to earlier was claiming: that the negotiation machine learning experiment had mutated its behavior to create something the human engineers had not anticipated -- a language of its own.)

What you can predict about this process though, is that over the long term, survival of the fittest will direct the evolution of an organism or civilization towards being as competitive and adaptive as it can be.

Why do you think that we need to provide for a military automation?

Only if we outlawed "peaceful" automation of infrastructure would that be the case. Almost certainly this will not be the case of course. But if your goal is to "save the world", as your topic title suggests, then this is exactly what you would need to be advocating for.

You are projecting. You are talking about a machine learning algorithm, not a human.

I should say again that while in the darkest possible scenario, an automated civilization would have no human component at all, in a nearly as dark scenario, one of your dictator types or a very tiny elite could run an entire civilization with no other people in it, thanks to automation of infrastructure. Then it really would be a human(s) making the biggest decision and not a machine learning algorithm.

But this elite would not even need killer robots, they could simply reorganize the infrastructure to no longer feed anyone but themselves. It could be very much like how France has nearly exterminated the local European Hamster population by converting croplands from growing cabbages to growing maize.

« Last Edit: September 20, 2018, 06:47:10 pm by Deus Siddis »

Deus Siddis

Enlightened

Offline

Posts: 1387

Machine learning algorithms don't necessarily care about their own survival unless we program them to care about their own survival. Maybe it should be illegal to make machine learning algorithms that care about their own survival and growth since such machine learning algorithms might become competitors to biological organisms.

Indeed. But this comes back to a key point you made earlier:

"Think more of an AI that is a military general. This would be advantageous since the AI general is capable of analyzing much more data much faster than any human general. If the AI general also has access to drones and ICBMs, it can also accomplish things much faster than by giving orders to human soldiers. If either China, Russia or the US decides to make an AI general, they will have a much better general than the other superpowers. So they are probably all working on making AI generals. Also, why not make a fully automatic factory producing drones and controlled by the same AI? Then the AI general can produce as many drones as it needs. They are probably already experimenting with such systems in China. Now let's build a few fully automatized aircraft carriers filled with tons of drones, but without any people. Get yourself a few such aircraft carriers, and you can have the AI general waging war all by itself. This is maybe 10 years from now."

Just as there are powerful incentives to make a military as smart, fast and efficient as can be so as not to fall behind your competitors in warfare, there are very similar incentives to protect and grow your economic infrastructure. So just as you cannot trust the militaries of the world to maintain proper safeguards and limitations on killer robots, you cannot trust the world's civil bodies to keep proper safeguards and limitations on autonomous infrastructure. The long-term risk and the short-term incentives are both dangerously high.

I can imagine a world where humans are only consumers, but not producers. Would that be a very dark scenario? You could sit and play fictional computer games all day, travel wherever you wanted, have sexual intercourse, use recreational drugs, and the machines would provide you with everything.

It would not be dark at all if it just stayed that way. It would be a utopian paradise. The problem is only that a civilization that can produce just as much with no consumers will eventually outcompete one that can produce just as much but does have consumers.

So the long term paradise is one where we live mostly hedonistic lives but still produce enough useful high level thinking beyond what machine learning can accomplish alone that it more than offsets the cost of our upkeep at a high quality of life. Unfortunately we have no control over this -- the physical laws of our universe have preset whether or not AI can be fully better than us. We are just waiting to find out which is the case.

That said, we probably have some control over how fast we develop technology in general, such that if we were all incompetent enough in how efficiently we pursued technological progress we might see a major natural disaster remove our ability to destroy our own future before we could fully utilize that ability.

Zanthius

Enlightened

Offline

Posts: 941

I am thinking, maybe I could get the players to deliver garbage to my company, and then I could use the new chromatography columns I have developed to extract useful chemicals from the garbage.

« Last Edit: September 24, 2018, 11:24:41 am by Zanthius »
